Information Theory | Kullback-Leibler Divergence

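Overview

KL divergence (Kullback-Leibler divergence), also called relative entropy, is a key measure in information theory and statistics of how one probability distribution differs from another. It is widely used in machine learning, deep learning, and variational inference.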

Mathematical Definition

Discrete distributions

For discrete probability distributions $P$ and $Q$, the KL divergence is defined as:

$$D_{KL}(P||Q) = \sum_{x} P(x) \log\frac{P(x)}{Q(x)}$$

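Continuous distributions

For continuous probability distributions with densities $p$ and $q$, the KL divergence is defined as: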

$$D_{KL}(P||Q) = \int p(x) \log\frac{p(x)}{q(x)} dx$$

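where:

$P$ is the true (or target) distribution

$Q$ is the approximating (or model) distribution

$\log$ is usually the natural logarithm (base $e$), giving units of nats; with base 2 the units are bits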

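Core Properties

1. Non-negativity

$$D_{KL}(P||Q) \geq 0$$

Equality holds (that is, $D_{KL}(P||Q) = 0$) if and only if $P = Q$.

2. Asymmetry

In general,

$$D_{KL}(P||Q) \neq D_{KL}(Q||P)$$

This means KL divergence is not a true distance metric, because it is not symmetric.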
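The asymmetry is easy to see numerically. The sketch below uses two illustrative three-outcome distributions and a small helper, `kl_divergence`, which is a name chosen here for illustration rather than a library function.

```python
import math

def kl_divergence(p, q):
    """D_KL(p || q) in nats for two discrete distributions given as lists."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

P = [0.5, 0.3, 0.2]
Q = [0.4, 0.4, 0.2]

forward = kl_divergence(P, Q)   # D_KL(P || Q)
reverse = kl_divergence(Q, P)   # D_KL(Q || P)
print(f"D_KL(P||Q) = {forward:.4f} nats")   # 0.0253
print(f"D_KL(Q||P) = {reverse:.4f} nats")   # 0.0258
```

The two values are close here because the distributions are close, but they are not equal.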

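3. No triangle inequality

KL divergence does not satisfy the triangle inequality. There exist distributions $P$, $Q$, $R$ for which

$$D_{KL}(P||R) > D_{KL}(P||Q) + D_{KL}(Q||R)$$

Therefore, KL divergence is not a distance function in the sense of a metric space.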
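One concrete counterexample, sketched with three Bernoulli distributions picked here purely for illustration:

```python
import math

def kl_divergence(p, q):
    """D_KL(p || q) in nats for discrete distributions given as lists."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Illustrative Bernoulli distributions, written as [P(x=1), P(x=0)].
P = [0.5, 0.5]
Q = [0.25, 0.75]
R = [0.1, 0.9]

direct = kl_divergence(P, R)
via_q = kl_divergence(P, Q) + kl_divergence(Q, R)
print(f"D_KL(P||R)              = {direct:.4f}")   # 0.5108
print(f"D_KL(P||Q) + D_KL(Q||R) = {via_q:.4f}")    # 0.2362
```

Going "through" $Q$ is cheaper than going directly from $P$ to $R$, which a true metric would not allow.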

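4. Convexity

KL divergence is jointly convex in the pair $(P, Q)$; in particular, it is convex in its first argument $P$ for fixed $Q$.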

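Intuition

Information-theoretic view

KL divergence is the expected extra information (in bits or nats) needed to encode samples from $P$ using a code optimized for $Q$, compared with a code optimized for $P$ itself.

If $Q$ is identical to $P$, no extra information is needed and $D_{KL}(P||Q) = 0$

If $Q$ differs greatly from $P$, more extra information is needed to make up for the loss in coding efficiency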

Statistical view

KL divergence measures how well $Q$ approximates $P$:

The smaller the value, the closer $Q$ is to $P$

The larger the value, the more $Q$ differs from $P$

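Machine learning view

In machine learning, typically:

$P$ is the true data distribution (unknown, but observable through samples in the data)

$Q$ is the distribution learned by the model (determined by parameters $\theta$)

Minimizing $D_{KL}(P||Q)$ amounts to making the model distribution as close as possible to the true distribution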

Mathematical Derivations

1. Derivation from entropy

Entropy

The entropy of a distribution $P$ is defined as:

$$H(P) = -\sum_{x} P(x) \log P(x)$$

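It is the minimum average number of bits needed to encode samples from $P$.

Cross entropy

The average number of bits needed to encode samples from $P$ using a code built for $Q$: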

$$H(P, Q) = -\sum_{x} P(x) \log Q(x)$$

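KL divergence = cross entropy - entropy

$$\begin{align}
D_{KL}(P||Q) &= \sum_{x} P(x) \log\frac{P(x)}{Q(x)} \\
&= \sum_{x} P(x) \log P(x) - \sum_{x} P(x) \log Q(x) \\
&= -H(P) + H(P, Q) \\
&= H(P, Q) - H(P)
\end{align}$$

This shows that KL divergence is the extra information required by the suboptimal code $Q$ compared with the optimal code $P$.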
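The identity can be checked numerically. The following is a minimal sketch; the helper names `entropy`, `cross_entropy` and `kl_divergence` are chosen here for illustration.

```python
import math

def entropy(p):
    """H(p) in nats."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """H(p, q) in nats."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    """D_KL(p || q) in nats."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

P = [0.5, 0.3, 0.2]
Q = [0.4, 0.4, 0.2]

gap = cross_entropy(P, Q) - entropy(P)
kl = kl_divergence(P, Q)
print(gap, kl)  # the two values agree up to floating-point error
```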

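2. Proof of non-negativity (Gibbs' inequality)

We use Jensen's inequality to prove that KL divergence is non-negative.

Jensen's inequality

For a convex function $f(x)$ and a probability distribution $P$: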

$$f\left(\sum_{x} P(x) \cdot x\right) \leq \sum_{x} P(x) \cdot f(x)$$

For a concave function (such as $\log$), the inequality is reversed.

Proof

Since $f(x) = -\log(x)$ is convex, we have:

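$$\begin{align}
D_{KL}(P||Q) &= \sum_{x} P(x) \log\frac{P(x)}{Q(x)} \\
&= \sum_{x} P(x) \left(-\log\frac{Q(x)}{P(x)}\right) \\
&\geq -\log\left(\sum_{x} P(x) \cdot \frac{Q(x)}{P(x)}\right) \\
&= -\log\left(\sum_{x} Q(x)\right) \\
&= -\log 1 = 0
\end{align}$$

Equality holds if and only if $\frac{Q(x)}{P(x)}$ is constant, that is, $P(x) = Q(x)$ for all $x$.

Conclusion: $D_{KL}(P||Q) \geq 0$, with equality only when $P = Q$.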

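3. Relation to maximum likelihood estimation

In machine learning, we usually want parameters $\theta$ such that the model distribution $P_\theta$ is as close as possible to the true data distribution $P_{data}$.

Minimizing the KL divergence

$$\begin{align}
\arg\min_\theta D_{KL}(P_{data}||P_\theta) &= \arg\min_\theta \sum_{x} P_{data}(x) \log\frac{P_{data}(x)}{P_\theta(x)} \\
&= \arg\min_\theta \left[-H(P_{data}) - \sum_{x} P_{data}(x) \log P_\theta(x)\right] \\
&= \arg\max_\theta \sum_{x} P_{data}(x) \log P_\theta(x)
\end{align}$$

The last step holds because $H(P_{data})$ does not depend on $\theta$.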

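Empirical approximation

In practice we cannot access $P_{data}$ directly, but we do have samples from it in a dataset $\{x_1, x_2, \ldots, x_N\}$. Using the empirical distribution:

$$\hat{P}_{data}(x) = \frac{1}{N} \sum_{i=1}^{N} \delta(x - x_i)$$

and substituting it into the expression above: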

$$\max_\theta \sum_{x} P_{data}(x) \log P_\theta(x) \approx \max_\theta \frac{1}{N} \sum_{i=1}^{N} \log P_\theta(x_i)$$

This is exactly the objective of maximum likelihood estimation (MLE).
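A small numerical sketch of this link for a categorical model: the average negative log-likelihood of a dataset equals the entropy of the empirical distribution plus the KL divergence from it to the model. The "true" probabilities, the sample size and the candidate `theta` below are arbitrary assumptions made for the demonstration.

```python
import math
import random
from collections import Counter

random.seed(0)
# Hypothetical "true" categorical distribution, used only to draw data.
true_probs = [0.5, 0.3, 0.2]
data = random.choices(range(3), weights=true_probs, k=1000)

counts = Counter(data)
p_hat = [counts[k] / len(data) for k in range(3)]  # empirical distribution

def avg_nll(theta):
    """Average negative log-likelihood of the data under a categorical model."""
    return -sum(math.log(theta[x]) for x in data) / len(data)

def entropy(p):
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def kl_divergence(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

theta = [0.4, 0.4, 0.2]  # an arbitrary candidate model
lhs = avg_nll(theta)
rhs = entropy(p_hat) + kl_divergence(p_hat, theta)
print(lhs, rhs)                         # equal up to floating-point error
print(avg_nll(p_hat), entropy(p_hat))   # the MLE theta = p_hat reaches H(p_hat)
```

Since the entropy term does not depend on `theta`, lowering the average negative log-likelihood and lowering the KL divergence to the empirical distribution are the same thing.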

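Worked Examples

Example 1: Discrete distributions

Suppose the true distribution is $P = [0.5, 0.3, 0.2]$ and the approximating distribution is $Q = [0.4, 0.4, 0.2]$.

Compute $D_{KL}(P||Q)$:

$$\begin{align}
D_{KL}(P||Q) &= 0.5 \log\frac{0.5}{0.4} + 0.3 \log\frac{0.3}{0.4} + 0.2 \log\frac{0.2}{0.2} \\
&\approx 0.5 \times 0.2231 + 0.3 \times (-0.2877) + 0.2 \times 0 \\
&\approx 0.1116 - 0.0863 + 0 \\
&\approx 0.0253 \text{ nats}
\end{align}$$

Converting to bits (dividing by $\ln 2 \approx 0.693$):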

$$D_{KL}(P||Q) \approx 0.0365 \text{ bits}$$
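The hand calculation can be reproduced in a few lines. This is a sketch; `kl_divergence` and its `base` parameter are names introduced here for illustration.

```python
import math

def kl_divergence(p, q, base=math.e):
    """D_KL(p || q) for discrete distributions; base e gives nats, base 2 gives bits."""
    return sum(pi * math.log(pi / qi, base) for pi, qi in zip(p, q) if pi > 0)

P = [0.5, 0.3, 0.2]
Q = [0.4, 0.4, 0.2]

kl_nats = kl_divergence(P, Q)
kl_bits = kl_divergence(P, Q, base=2)
print(f"{kl_nats:.4f} nats")   # 0.0253 nats
print(f"{kl_bits:.4f} bits")   # 0.0365 bits
```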

Example 2: Gaussian distributions

For two univariate Gaussian distributions:

$P = \mathcal{N}(\mu_1, \sigma_1^2)$

$Q = \mathcal{N}(\mu_2, \sigma_2^2)$

the KL divergence has the closed form:

$$D_{KL}(P||Q) = \log\frac{\sigma_2}{\sigma_1} + \frac{\sigma_1^2 + (\mu_1 - \mu_2)^2}{2\sigma_2^2} - \frac{1}{2}$$

Special case: when $Q = \mathcal{N}(0, 1)$ (the standard normal distribution):

$$D_{KL}(P||Q) = \frac{1}{2}\left(\mu_1^2 + \sigma_1^2 - \log\sigma_1^2 - 1\right)$$

This formula is widely used in VAEs (variational autoencoders).
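As a sanity check, the closed form can be compared with a direct numerical integration of $\int p(x) \log\frac{p(x)}{q(x)} dx$. The sketch below uses a simple midpoint Riemann sum; the integration range, step count and test parameters are arbitrary choices made here.

```python
import math

def gaussian_kl(mu1, sigma1, mu2, sigma2):
    """Closed-form D_KL(N(mu1, sigma1^2) || N(mu2, sigma2^2)) in nats."""
    return (math.log(sigma2 / sigma1)
            + (sigma1 ** 2 + (mu1 - mu2) ** 2) / (2 * sigma2 ** 2)
            - 0.5)

def normal_pdf(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def numeric_kl(mu1, sigma1, mu2, sigma2, lo=-20.0, hi=20.0, n=40000):
    """Midpoint Riemann-sum approximation of the KL integral."""
    dx = (hi - lo) / n
    total = 0.0
    for i in range(n):
        x = lo + (i + 0.5) * dx
        p = normal_pdf(x, mu1, sigma1)
        q = normal_pdf(x, mu2, sigma2)
        if p > 0:  # skip points where p underflows to zero
            total += p * math.log(p / q) * dx
    return total

mu1, sigma1 = 1.0, 0.5
closed = gaussian_kl(mu1, sigma1, 0.0, 1.0)
numeric = numeric_kl(mu1, sigma1, 0.0, 1.0)
# Special case against the standard normal, as in the formula above.
special = 0.5 * (mu1 ** 2 + sigma1 ** 2 - math.log(sigma1 ** 2) - 1)
print(closed, numeric, special)  # all three should agree closely
```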

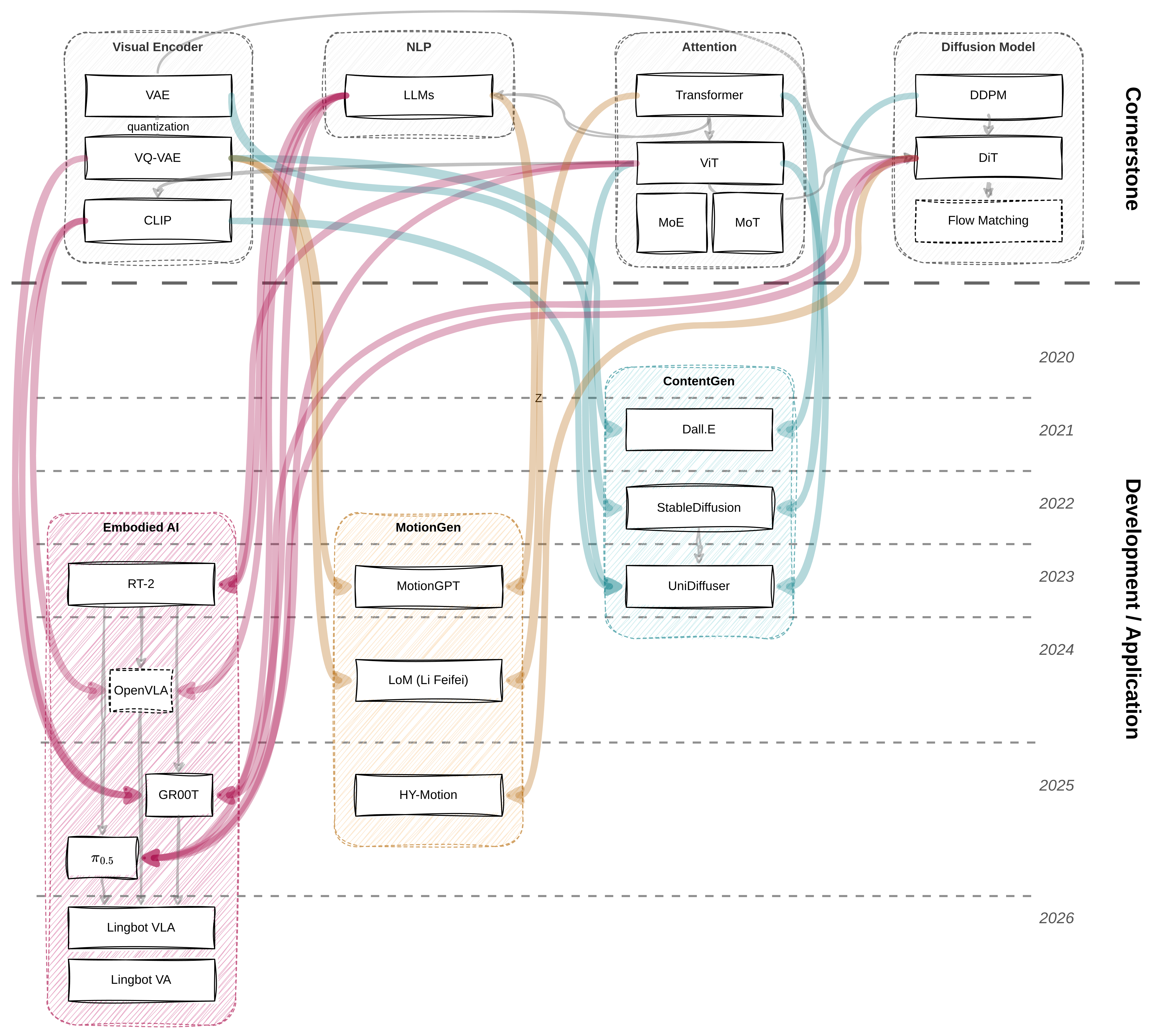

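Applications in Machine Learning

1. Variational inference

In Bayesian inference we want the posterior $P(\theta|X)$, which is usually intractable to compute directly. Variational inference approximates it with a simpler distribution $Q(\theta)$, chosen to minimize the KL divergence to the posterior:

$$Q^*(\theta) = \arg\min_{Q} D_{KL}(Q(\theta)||P(\theta|X))$$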

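2. Variational autoencoders (VAE)

The VAE objective (the evidence lower bound, which is maximized; the training loss is its negative) contains a KL divergence term that regularizes the latent space:

$$\mathcal{L} = \mathbb{E}_{q(z|x)}[\log p(x|z)] - D_{KL}(q(z|x)||p(z))$$

where:

The first term is the reconstruction term

The second term is the KL divergence, which keeps the encoder output close to the prior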
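For the common case of a diagonal Gaussian encoder and a standard normal prior, the KL term reduces to the per-dimension closed form $\frac{1}{2}(\mu^2 + \sigma^2 - \log\sigma^2 - 1)$ summed over latent dimensions. The sketch below assumes the encoder outputs a mean vector `mu` and a log-variance vector `logvar`; both names and the parameterization are assumptions made here, not taken from the text.

```python
import math

def vae_kl_term(mu, logvar):
    """KL(N(mu, diag(exp(logvar))) || N(0, I)) in nats, summed over dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, logvar))

# Encoder output equal to the prior: no penalty.
kl_zero = vae_kl_term([0.0, 0.0], [0.0, 0.0])

# Hypothetical two-dimensional encoder output.
kl_example = vae_kl_term([1.0, -0.5], [math.log(0.25), 0.0])
print(kl_zero, kl_example)
```

In a training loop this value would be computed per sample with a tensor library and added to the reconstruction loss.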

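3. Policy optimization (reinforcement learning)

In reinforcement learning, KL divergence is used to limit the size of each policy update:

$$\max_\theta \mathbb{E}_{\pi_\theta}[R] \quad \text{s.t.} \quad D_{KL}(\pi_{old}||\pi_\theta) \leq \delta$$

This is the core idea behind TRPO (Trust Region Policy Optimization) and PPO (Proximal Policy Optimization).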
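To make the constraint concrete, the sketch below checks it for a discrete action distribution in a single state. The probabilities and the threshold `delta` are made-up values, and a real implementation would average the KL over sampled states; this only illustrates the acceptance test.

```python
import math

def kl_divergence(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical action probabilities in one state, before and after an update.
pi_old = [0.6, 0.3, 0.1]
candidates = {
    "small step": [0.55, 0.33, 0.12],
    "large step": [0.1, 0.2, 0.7],
}
delta = 0.01  # assumed trust-region radius, in nats

results = {name: kl_divergence(pi_old, pi_new) for name, pi_new in candidates.items()}
for name, kl in results.items():
    print(f"{name}: KL = {kl:.4f}, within trust region = {kl <= delta}")
```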

4. Knowledge distillation

KL divergence is used to make a small model (the student) learn the output distribution of a large model (the teacher):

$$\mathcal{L} = D_{KL}(P_{teacher}||P_{student})$$
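A minimal sketch of this loss for a single example, assuming both models output raw logits that are turned into probabilities with a softmax. The logit values are hypothetical.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

teacher_logits = [2.0, 1.0, 0.1]   # hypothetical teacher output
student_logits = [1.5, 1.2, 0.3]   # hypothetical student output

p_teacher = softmax(teacher_logits)
p_student = softmax(student_logits)

loss = kl_divergence(p_teacher, p_student)
print(f"distillation loss = {loss:.4f} nats")
```

The loss is zero only when the student reproduces the teacher's distribution exactly.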

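5. Generative adversarial networks (GAN)

GANs do not optimize KL divergence directly, but theoretical analysis shows that the GAN objective is related to the JS divergence (Jensen-Shannon divergence), which is itself defined in terms of KL divergence: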

$$D_{JS}(P||Q) = \frac{1}{2}D_{KL}(P||M) + \frac{1}{2}D_{KL}(Q||M)$$

where $M = \frac{1}{2}(P + Q)$.

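Forward KL vs. Reverse KL

Forward KL: $D_{KL}(P||Q)$

Meaning: approximate $P$ with $Q$

Characteristics:

The divergence is infinite when $P(x) > 0$ but $Q(x) = 0$

$Q$ must cover the entire support of $P$ (zero-avoiding)

$Q$ tends to cover all modes of $P$ and may be too spread out

Reverse KL: $D_{KL}(Q||P)$

Meaning: approximate $P$ with $Q$, but with the roles of the two arguments swapped

Characteristics:

The divergence is infinite when $Q(x) > 0$ but $P(x) = 0$

$Q$ must concentrate on the high-probability regions of $P$ (zero-forcing)

$Q$ tends to lock onto a single mode of $P$ and may be too concentrated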

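Comparison table

| Property | Forward KL $D_{KL}(P \Vert Q)$ | Reverse KL $D_{KL}(Q \Vert P)$ |
| --- | --- | --- |
| Optimization objective | Maximum likelihood estimation | Variational inference |
| Behavior | Zero-avoiding | Zero-forcing |
| Multimodal targets | Covers all modes (spread out) | Picks a single mode (concentrated) |
| Applications | Supervised learning, MLE | VAE, variational inference |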
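The mode-covering versus mode-seeking behavior can be reproduced with a small experiment: fit a single Gaussian $Q$ to a bimodal target $P$ by brute-force search, once under each direction of the divergence. Everything below (the grid, the mixture, the candidate means and standard deviations) is an illustrative setup chosen here, not part of the original text.

```python
import math

xs = [-6.0 + 0.05 * i for i in range(241)]  # evaluation grid on [-6, 6]

def normalize(weights):
    total = sum(weights)
    return [w / total for w in weights]

def gaussian_on_grid(mean, std):
    """Gaussian shape evaluated on the grid and normalized to sum to 1."""
    return normalize([math.exp(-0.5 * ((x - mean) / std) ** 2) for x in xs])

def kl_divergence(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Bimodal target P: equal mixture of N(-2, 0.5^2) and N(2, 0.5^2), discretized.
P = normalize([a + b for a, b in zip(gaussian_on_grid(-2.0, 0.5),
                                     gaussian_on_grid(2.0, 0.5))])

# Candidate single-Gaussian approximations, searched over a coarse grid.
means = [-3.0 + 0.5 * i for i in range(13)]
stds = [0.3 + 0.1 * i for i in range(28)]
candidates = [(m, s, gaussian_on_grid(m, s)) for m in means for s in stds]

fwd_m, fwd_s, _ = min(candidates, key=lambda c: kl_divergence(P, c[2]))
rev_m, rev_s, _ = min(candidates, key=lambda c: kl_divergence(c[2], P))
print(f"forward KL picks mean={fwd_m:.1f}, std={fwd_s:.1f}")
print(f"reverse KL picks mean={rev_m:.1f}, std={rev_s:.1f}")
```

Under forward KL the best fit sits between the two modes with a large standard deviation; under reverse KL it collapses onto one of the modes with a small one.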

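Relation to Other Divergences

1. JS divergence (Jensen-Shannon divergence)

JS divergence is a symmetrized version of KL divergence:

$$D_{JS}(P||Q) = \frac{1}{2}D_{KL}(P||M) + \frac{1}{2}D_{KL}(Q||M)$$

where $M = \frac{1}{2}(P + Q)$.

Properties:

Symmetric: $D_{JS}(P||Q) = D_{JS}(Q||P)$

Bounded: $0 \leq D_{JS}(P||Q) \leq \log 2$

Its square root $\sqrt{D_{JS}}$ satisfies the triangle inequality and is therefore a true metric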
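A short sketch of the definition, checking symmetry and the upper bound. The helper names are chosen here for illustration.

```python
import math

def kl_divergence(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def js_divergence(p, q):
    """Jensen-Shannon divergence in nats."""
    m = [0.5 * (pi + qi) for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)

P = [0.5, 0.3, 0.2]
Q = [0.4, 0.4, 0.2]
js_pq = js_divergence(P, Q)
js_qp = js_divergence(Q, P)
print(js_pq, js_qp)  # same value in both directions

# Disjoint supports: KL would be infinite, JS reaches its maximum log 2.
A = [1.0, 0.0]
B = [0.0, 1.0]
js_disjoint = js_divergence(A, B)
print(js_disjoint, math.log(2))
```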

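2. Wasserstein distance

The Wasserstein distance (also known as the Earth Mover's Distance) is another measure of the difference between distributions, and is widely used in GANs (WGAN).

The Wasserstein distance is a true metric (it satisfies symmetry and the triangle inequality)

The Wasserstein distance remains meaningful even when two distributions do not overlap

KL divergence can be infinite when the distributions do not overlap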
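The contrast shows up clearly with two point masses on a one-dimensional grid. The sketch below assumes unit grid spacing and uses the one-dimensional identity that the 1-Wasserstein distance equals the summed absolute difference between the two CDFs; the helper names are illustrative.

```python
import math

def kl_divergence(p, q):
    """D_KL(p || q); returns inf when q is zero somewhere p is not."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0:
            if qi == 0:
                return math.inf
            total += pi * math.log(pi / qi)
    return total

def wasserstein_1d(p, q):
    """W1 on a unit-spaced 1-D grid: sum of absolute CDF differences."""
    cdf_p = cdf_q = 0.0
    dist = 0.0
    for pi, qi in zip(p, q):
        cdf_p += pi
        cdf_q += qi
        dist += abs(cdf_p - cdf_q)
    return dist

def point_mass(position, size=10):
    return [1.0 if i == position else 0.0 for i in range(size)]

P = point_mass(0)
for shift in (1, 3, 7):
    Q = point_mass(shift)
    print(shift, kl_divergence(P, Q), wasserstein_1d(P, Q))
```

KL is infinite for every shift, so it carries no information about how far apart the distributions are, while the Wasserstein distance grows with the shift.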

3. $\chi^2$ divergence

$$D_{\chi^2}(P||Q) = \sum_{x} \frac{(P(x) - Q(x))^2}{Q(x)}$$
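A small sketch computing the $\chi^2$ divergence next to the KL divergence for two illustrative distributions. It also shows a relation not stated above: for nearby distributions, the KL divergence in nats is roughly half the $\chi^2$ divergence, which follows from a second-order Taylor expansion.

```python
import math

def kl_divergence(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def chi2_divergence(p, q):
    """Chi-squared divergence; assumes q(x) > 0 everywhere."""
    return sum((pi - qi) ** 2 / qi for pi, qi in zip(p, q))

P = [0.5, 0.3, 0.2]
Q = [0.4, 0.4, 0.2]

chi2 = chi2_divergence(P, Q)
kl = kl_divergence(P, Q)
print(f"chi^2     = {chi2:.4f}")      # 0.0500
print(f"chi^2 / 2 = {chi2 / 2:.4f}")  # 0.0250
print(f"KL        = {kl:.4f}")        # 0.0253
```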

Frequently Asked Questions

Question 1: Why is KL divergence asymmetric?

Reason: $P$ and $Q$ play different roles in the definition of KL divergence:

$$D_{KL}(P||Q) = \sum_{x} P(x) \log\frac{P(x)}{Q(x)}$$

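$P$ appears as the weight in the sum

$Q$ appears in the denominator inside the logarithm

Swapping $P$ and $Q$ generally gives a different result.

Intuition:

$D_{KL}(P||Q)$ measures the extra information needed to encode samples from $P$ using $Q$

$D_{KL}(Q||P)$ measures the extra information needed to encode samples from $Q$ using $P$

The two are generally not equal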

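Question 2: When should I use forward KL, and when reverse KL?

**Forward KL $D_{KL}(P||Q)$**:

When you want $Q$ to cover all modes of $P$

Maximum likelihood estimation

Supervised learning

**Reverse KL $D_{KL}(Q||P)$**:

When you want $Q$ to concentrate on the high-probability regions of $P$

Variational inference

VAE, variational Bayes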

Question 3: Can KL divergence be negative?

No. By Gibbs' inequality, KL divergence is always non-negative:

$$D_{KL}(P||Q) \geq 0$$

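Question 4: How do I handle $Q(x) = 0$?

When $P(x) > 0$ but $Q(x) = 0$, $\log\frac{P(x)}{Q(x)} = \infty$, so the KL divergence is infinite.

Solutions:

Laplace smoothing: add a small constant $\epsilon$ to $Q(x)$ and renormalize

Match the supports: make sure the support of $Q$ contains the support of $P$

Use a different divergence: for example the Wasserstein distance, which is more robust for non-overlapping distributions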
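The smoothing option can be sketched as follows. The helper `smooth`, its default `epsilon`, and the explicit handling of zero entries are choices made here for illustration; the resulting value depends strongly on `epsilon`, so it should be read as a regularized quantity rather than the true divergence.

```python
import math

def smooth(dist, epsilon=1e-6):
    """Add epsilon to every entry and renormalize so the result sums to 1."""
    shifted = [d + epsilon for d in dist]
    total = sum(shifted)
    return [s / total for s in shifted]

def kl_divergence(p, q):
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue          # convention: 0 * log(0 / q) = 0
        if qi == 0:
            return math.inf   # P has mass where Q has none
        total += pi * math.log(pi / qi)
    return total

P = [0.5, 0.5, 0.0]
Q = [1.0, 0.0, 0.0]

raw = kl_divergence(P, Q)
smoothed = kl_divergence(P, smooth(Q))
print(raw)       # inf
print(smoothed)  # finite, but large and dependent on epsilon
```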

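Best Practices

1. Numerical stability

When implementing KL divergence, avoid computing $\log\frac{P(x)}{Q(x)}$ directly; compute the difference of logarithms instead:

```python
import numpy as np

P = np.array([0.5, 0.3, 0.2])
Q = np.array([0.4, 0.4, 0.2])

# Not recommended: forms the ratio P / Q before taking the log
kl = np.sum(P * np.log(P / Q))

# Recommended: subtract the logs, then sum the per-outcome terms
kl = np.sum(P * (np.log(P) - np.log(Q)))
```

To avoid taking the log of zero, add a small constant:

```python
epsilon = 1e-10  # keeps log() away from zero
kl = np.sum(P * (np.log(P + epsilon) - np.log(Q + epsilon)))
```

Library implementations can also be used:

```python
# PyTorch: the first argument is log Q, the second is P (batched tensors)
import torch
import torch.nn.functional as F
P_t, Q_t = torch.tensor([[0.5, 0.3, 0.2]]), torch.tensor([[0.4, 0.4, 0.2]])
kl_loss = F.kl_div(Q_t.log(), P_t, reduction='batchmean')

# TensorFlow: arguments are (y_true, y_pred) = (P, Q)
import tensorflow as tf
kl_loss = tf.keras.losses.KLDivergence()(P, Q)

# SciPy: entropy(P, Q) returns D_KL(P || Q) in nats
from scipy.stats import entropy
kl_div = entropy(P, Q)
```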

References