Repository:

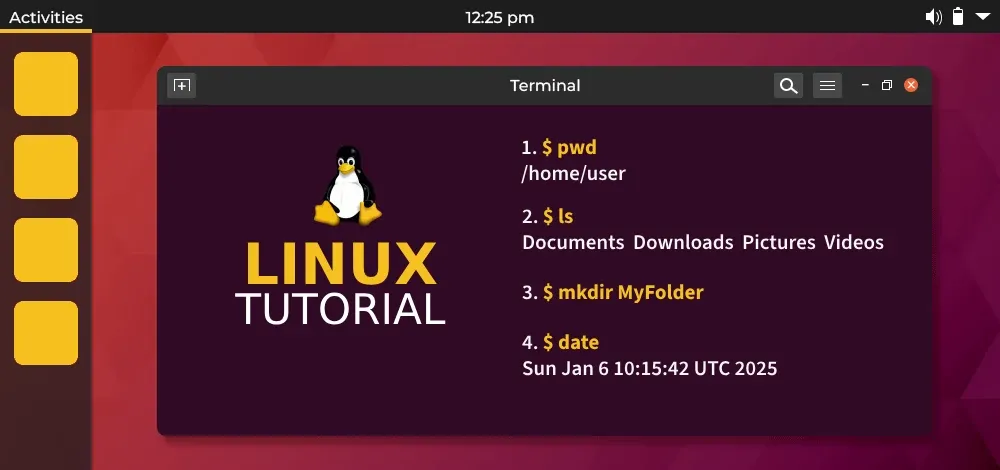

Installation

official:

    conda env create -f conda.yaml

Using the official conda.yaml is not recommended; use the modified conda_cyl.yaml instead.

    pip install torch==2.0.0 torchvision==0.15.1 torchaudio==2.0.1

Demo 🐱

The official repository provides notebooks for depth estimation and segmentation; it is worth setting aside time to work through them.

Train

The dataset used is Imagenet-mini.

    imagenet-mini

Note: an additional label.txt file must be added.

Use a script to generate the dataset's metadata:

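The original script is not reproduced in these notes. As a stand-in, the following is only a minimal sketch of what a metadata step might look like, assuming an ImageNet-style layout where each split directory contains one sub-directory per class. The function names `build_metadata` and `write_metadata`, the output file name, and the JSON record layout are all hypothetical and are not taken from the repository.

```python
import json
from pathlib import Path

# Assumption: images use one of these extensions (matched case-insensitively).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def build_metadata(split_dir):
    """Scan one split directory and return (class_names, entries).

    Hypothetical layout: <split_dir>/<class_dir>/<image file>.
    Each entry records the image path relative to split_dir and an
    integer label derived from the sorted class directory names.
    """
    split_dir = Path(split_dir)
    class_names = sorted(p.name for p in split_dir.iterdir() if p.is_dir())
    class_index = {name: i for i, name in enumerate(class_names)}

    entries = []
    for name in class_names:
        for img in sorted((split_dir / name).iterdir()):
            # Skip anything that is not an image file.
            if img.suffix.lower() in IMAGE_SUFFIXES:
                entries.append({
                    "path": img.relative_to(split_dir).as_posix(),
                    "label": class_index[name],
                })
    return class_names, entries


def write_metadata(split_dir, out_file):
    """Write the scanned metadata to a JSON file; return the image count."""
    class_names, entries = build_metadata(split_dir)
    with open(out_file, "w", encoding="utf-8") as f:
        json.dump({"classes": class_names, "entries": entries}, f, indent=2)
    return len(entries)
```

Calling `write_metadata("imagenet-mini/train", "train_meta.json")` would then produce one record per training image; both the split directory name and the output name here are placeholders. The format the training code actually expects should be checked against the repository before relying on this.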

![](Deeper Scene Graph For Robots/Pasted_image_20250328103811.png)

![](Contextual Translation Embedding for Visual Relationship Detection and Scene Graph Generation/Pasted_image_20250318160643.png)