Official repo: https://github.com/liuhengyue/fcsgg
https://github.com/PSGBOT/KAF-Generation

My venv: fcsgg

Installation

Environment Preparation

```bash
# Clone the repository and fetch its submodules
git clone git@github.com:liuhengyue/fcsgg.git
cd fcsgg
git submodule init
git submodule update

# Create the conda environment once, then activate it so later installs land in it
conda create -n fcsgg python=3.10
conda activate fcsgg

# CUDA 11.8 toolkit and a matching PyTorch build
conda install nvidia/label/cuda-11.8.0::cuda-toolkit -c nvidia/label/cuda-11.8.0
conda install cudatoolkit
pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

# Compilers for building the C++/CUDA extensions
conda install -c conda-forge gcc=11.2.0
conda install -c conda-forge gxx=11.2.0

# Point the build at the environment's libraries, headers, and CUDA install
conda env config vars set LD_LIBRARY_PATH="/home/cyl/miniconda3/envs/fcsgg/lib/"
conda env config vars set CPATH="/home/cyl/miniconda3/envs/fcsgg/include/"
conda env config vars set CUDA_HOME="/home/cyl/miniconda3/envs/fcsgg/"

# Re-activate so the variables above take effect
conda deactivate
conda activate fcsgg

export CC=$CONDA_PREFIX/bin/gcc
export CXX=$CONDA_PREFIX/bin/g++
pip install -r requirements.txt
python -m pip install -e detectron2
```
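Before building anything, it can help to confirm that the environment variables and packages from the steps above are actually visible to Python. The following is a minimal sketch, not part of the fcsgg repo; `check_env` is a hypothetical helper name, and it only reports what it finds rather than failing when something is missing.

```python
import importlib.util
import os
import shutil


def check_env():
    """Collect a few facts about the current environment without failing hard."""
    report = {
        "CUDA_HOME": os.environ.get("CUDA_HOME"),
        "CC": os.environ.get("CC"),
        "CXX": os.environ.get("CXX"),
        "nvcc_on_path": shutil.which("nvcc"),
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "detectron2_installed": importlib.util.find_spec("detectron2") is not None,
    }
    if report["torch_installed"]:
        import torch

        report["torch_version"] = torch.__version__
        report["torch_cuda_build"] = torch.version.cuda  # None for CPU-only builds
        report["cuda_available"] = torch.cuda.is_available()
    return report


report = check_env()
for key, value in report.items():
    print(f"{key}: {value}")
```

If `torch_cuda_build` does not start with `11.8`, or `CUDA_HOME` is empty, the environment was probably not re-activated after `conda env config vars set`.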

Downloads

Datasets:

```bash
cd ~/Reconst
wget https://cs.stanford.edu/people/rak248/VG_100K_2/images.zip -P ./Data/vg/
wget https://cs.stanford.edu/people/rak248/VG_100K_2/images2.zip -P ./Data/vg/
unzip -j ./Data/vg/images.zip -d ./Data/vg/VG_100K
unzip -j ./Data/vg/images2.zip -d ./Data/vg/VG_100K
```

Download the scene graphs and extract them to datasets/vg/VG-SGG-with-attri.h5.
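A quick way to confirm the download and extraction worked is to count the images and check that the scene graph file is in place. This is a minimal sketch; `summarize_vg` is a hypothetical helper, and the dataset root is a parameter because these notes mention both `./Data/vg` and `datasets/vg`. It assumes the images are `.jpg` files sitting directly in `VG_100K`, which is what `unzip -j` produces.

```python
from pathlib import Path


def summarize_vg(root):
    """Report whether the expected Visual Genome files exist under `root`."""
    root = Path(root)
    image_dir = root / "VG_100K"
    has_images = image_dir.is_dir()
    num_jpg = sum(1 for _ in image_dir.glob("*.jpg")) if has_images else 0
    return {
        "image_dir_exists": has_images,
        "num_jpg": num_jpg,
        "h5_exists": (root / "VG-SGG-with-attri.h5").is_file(),
    }


# Adjust the root to wherever the data was actually placed.
summary = summarize_vg("./Data/vg")
print(summary)
```

A `num_jpg` of zero with `image_dir_exists` true usually means the archives were extracted somewhere else.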

Issues

Issue 1

```
AttributeError: module 'PIL.Image' has no attribute 'LINEAR'. Did you mean: 'BILINEAR'?
```

Fix: replace LINEAR with BILINEAR (commit).
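Recent Pillow releases no longer expose `Image.LINEAR`, which is why the rename is needed. If editing the offending source is not an option, an alias can be added at runtime instead. This is only a sketch of that alternative, not what the commit above does; `alias_missing_attr` is a hypothetical helper, and it has to run before the code that references `Image.LINEAR` is imported.

```python
def alias_missing_attr(module, old_name, new_name):
    """Expose module.<new_name> under <old_name> if the old name is missing.

    Returns True if an alias was added, False if the old name already existed.
    """
    if hasattr(module, old_name):
        return False
    setattr(module, old_name, getattr(module, new_name))
    return True


try:
    from PIL import Image
except ImportError:  # Pillow not installed in this interpreter
    Image = None

if Image is not None:
    # Restore the removed name so older code that uses Image.LINEAR keeps working.
    alias_missing_attr(Image, "LINEAR", "BILINEAR")
```

Patching the source, as done in these notes, is the cleaner long-term fix; the alias is mainly useful when the old name lives in a dependency you do not want to modify.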

Issue 2

An error was raised while attempting to train:

```
File "/home/cyl/Reconst/fcsgg/fcsgg/data/detection_utils.py", line 432, in generate_score_map
    masked_fmap = torch.max(masked_fmap, gaussian_mask * k)
RuntimeError: The size of tensor a (55) must match the size of tensor b (56) at non-singleton dimension 1
```

Fix: modify detection_utils.py (commit).
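The error message itself comes from the broadcasting rule for element-wise operations: two sizes along a dimension are compatible only if they are equal or one of them is 1. The sketch below reproduces that check in plain Python to show why 55 against 56 fails. The shapes used are hypothetical (the real shapes of `masked_fmap` and `gaussian_mask` are not recorded in these notes), and `broadcast_mismatches` is an illustrative helper, not the fix from the commit.

```python
def broadcast_mismatches(shape_a, shape_b):
    """List (dim, size_a, size_b) for every dimension that cannot be broadcast."""
    ndim = max(len(shape_a), len(shape_b))
    # Shapes are aligned from the right, padding the shorter one with 1s.
    a = (1,) * (ndim - len(shape_a)) + tuple(shape_a)
    b = (1,) * (ndim - len(shape_b)) + tuple(shape_b)
    return [
        (dim, x, y)
        for dim, (x, y) in enumerate(zip(a, b))
        if x != y and 1 not in (x, y)
    ]


# Hypothetical shapes that would produce the message seen above.
print(broadcast_mismatches((56, 55), (56, 56)))
# A size-1 dimension broadcasts fine, so this prints an empty list.
print(broadcast_mismatches((56, 56), (1, 56)))
```

An off-by-one like this typically means the two tensors were sliced or sized with slightly different bounds, so the fix is to make both use the same extent.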

Training

First, edit the training config file ./configs/quick_schedules/Quick-FCSGG-HRNet-W32.yaml (the original file uses pretrained weights):

```yaml
MODEL:
  META_ARCHITECTURE: "CenterNet"
  HRNET:
    WEIGHTS: "output/FasterR-CNN-HR32-3x.pth"
```

Change it to train from scratch:

```yaml
MODEL:
  META_ARCHITECTURE: "CenterNet"
  HRNET:
    WEIGHTS: ""
```

Then run:

```bash
python tools/train_net.py --num-gpus 1 --config-file configs/quick_schedules/Quick-FCSGG-HRNet-W32.yaml
```

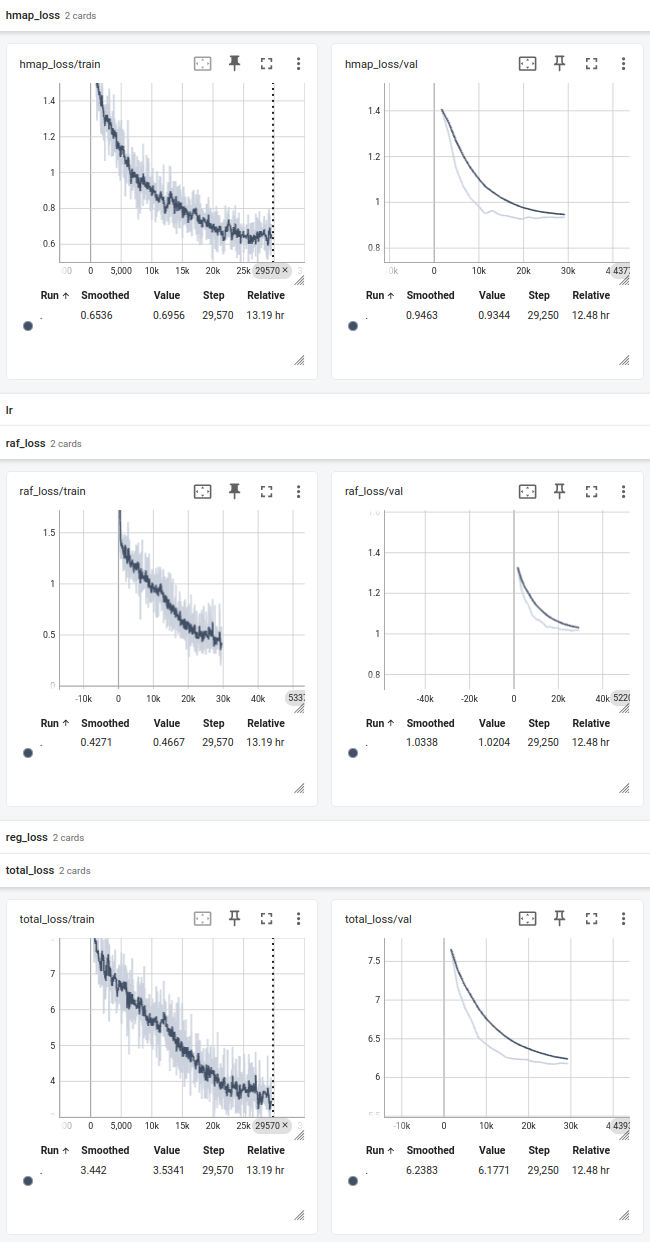

Training succeeded ✌

```
...
[04/23 10:21:01] d2.utils.events INFO: eta: 0:05:37 iter: 1159 total_loss: 1.042 loss_cls: 0.7593 loss_box_wh: 0.08827 loss_center_reg: 0.02485 loss_raf: 0.1754 time: 0.4042 last_time: 0.4519 data_time: 0.0043 last_data_time: 0.0044 lr: 0.001 max_mem: 4141M
[04/23 10:21:09] d2.utils.events INFO: eta: 0:05:29 iter: 1179 total_loss: 1.028 loss_cls: 0.7208 loss_box_wh: 0.09246 loss_center_reg: 0.02669 loss_raf: 0.1625 time: 0.4042 last_time: 0.4035 data_time: 0.0041 last_data_time: 0.0044 lr: 0.001 max_mem: 4141M
[04/23 10:21:17] d2.utils.events INFO: eta: 0:05:21 iter: 1199 total_loss: 1.01 loss_cls: 0.671 loss_box_wh: 0.1038 loss_center_reg: 0.02432 loss_raf: 0.1635 time: 0.4042 last_time: 0.3943 data_time: 0.0042 last_data_time: 0.0043 lr: 0.001 max_mem: 4141M
[04/23 10:21:25] d2.utils.events INFO: eta: 0:05:13 iter: 1219 total_loss: 0.9737 loss_cls: 0.6887 loss_box_wh: 0.0929 loss_center_reg: 0.02574 loss_raf: 0.1749 time: 0.4041 last_time: 0.4101 data_time: 0.0041 last_data_time: 0.0042 lr: 0.001 max_mem: 4141M
...
```
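The log lines above follow a regular `key: value` layout, so a short script can pull out the iteration number and loss terms to check that the loss is going down. This is a minimal sketch that assumes the layout seen in this run; `parse_log_line` is a hypothetical helper, not part of detectron2, and the sample lines are truncated after `loss_raf`.

```python
import re

# "iter: 1159" and every loss field such as "total_loss: 1.042" or "loss_cls: 0.7593".
ITER_RE = re.compile(r"\biter:\s*(\d+)")
LOSS_RE = re.compile(r"\b(total_loss|loss_\w+):\s*([0-9.eE+-]+)")


def parse_log_line(line):
    """Return {"iter": int, "<loss name>": float, ...} or None if no iteration is found."""
    match = ITER_RE.search(line)
    if match is None:
        return None
    record = {"iter": int(match.group(1))}
    for name, value in LOSS_RE.findall(line):
        record[name] = float(value)
    return record


sample = [
    "[04/23 10:21:01] d2.utils.events INFO: eta: 0:05:37 iter: 1159 total_loss: 1.042 "
    "loss_cls: 0.7593 loss_box_wh: 0.08827 loss_center_reg: 0.02485 loss_raf: 0.1754",
    "[04/23 10:21:25] d2.utils.events INFO: eta: 0:05:13 iter: 1219 total_loss: 0.9737 "
    "loss_cls: 0.6887 loss_box_wh: 0.0929 loss_center_reg: 0.02574 loss_raf: 0.1749",
]

records = [r for r in map(parse_log_line, sample) if r is not None]
for r in records:
    print(r["iter"], r["total_loss"])
```

To process a whole run, read the log file line by line and pass each line through `parse_log_line`; lines without an `iter:` field are skipped.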

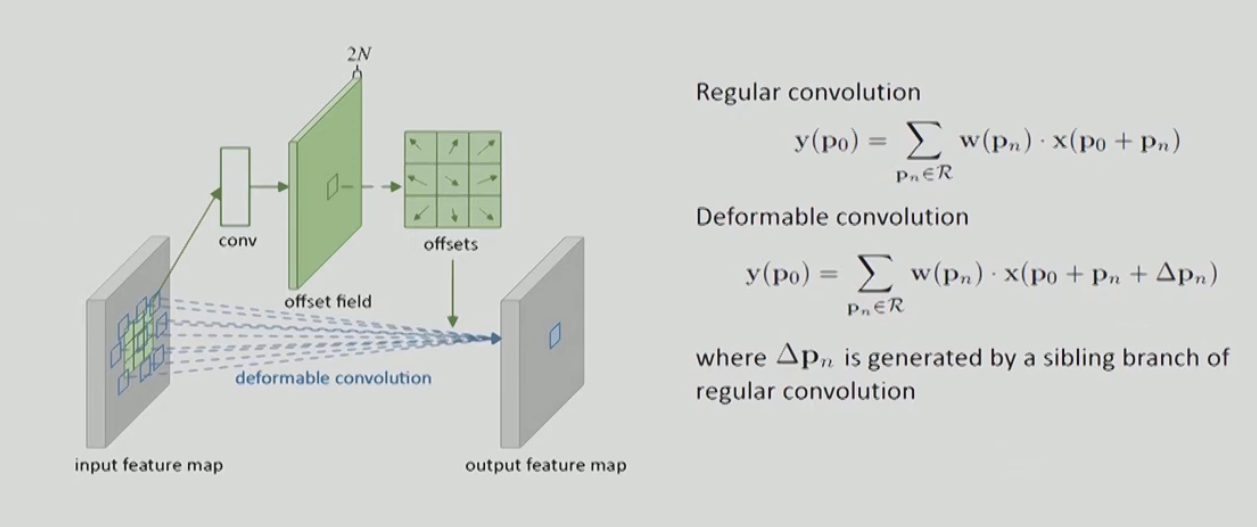

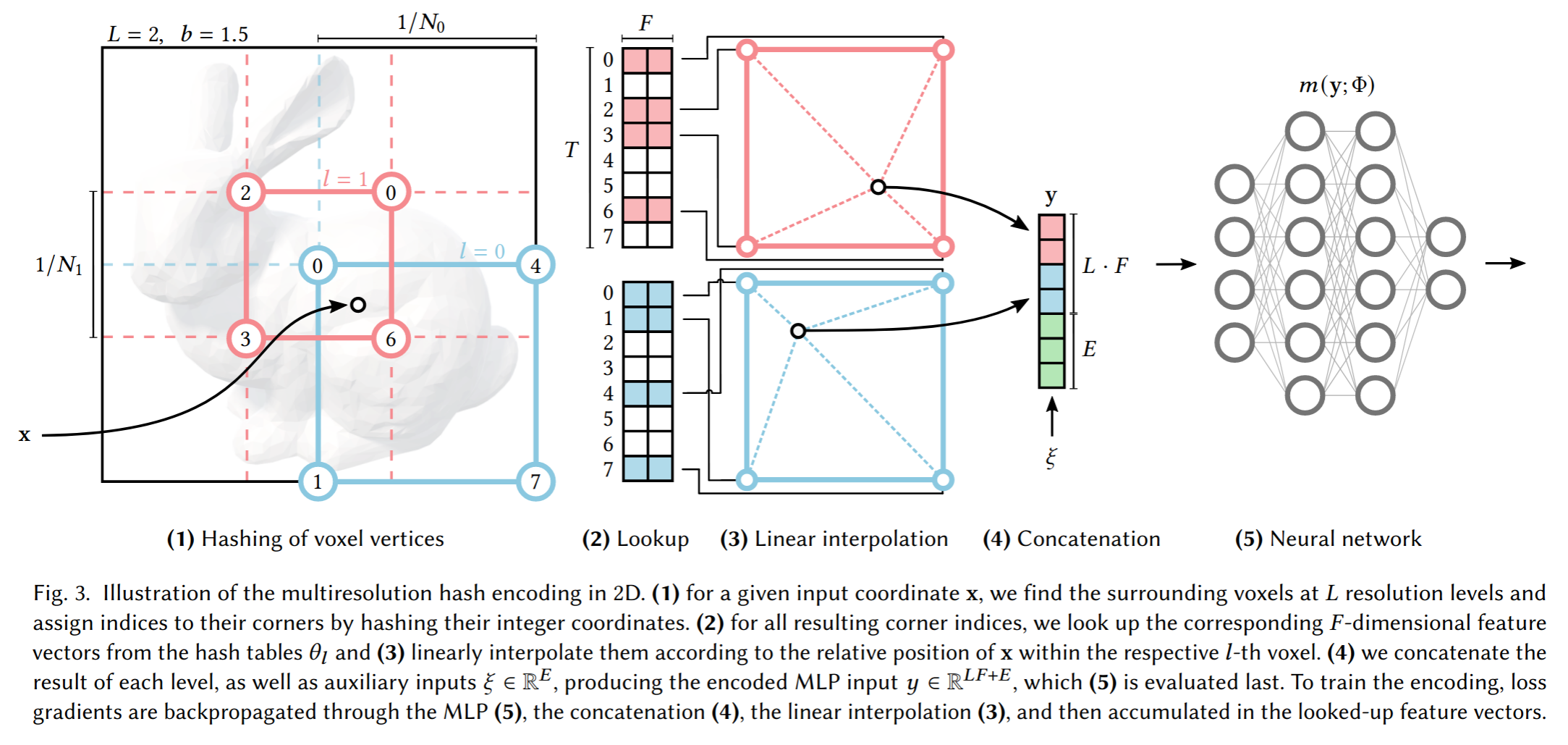

Explanation

See [[FCSGG Repo Explanation]]