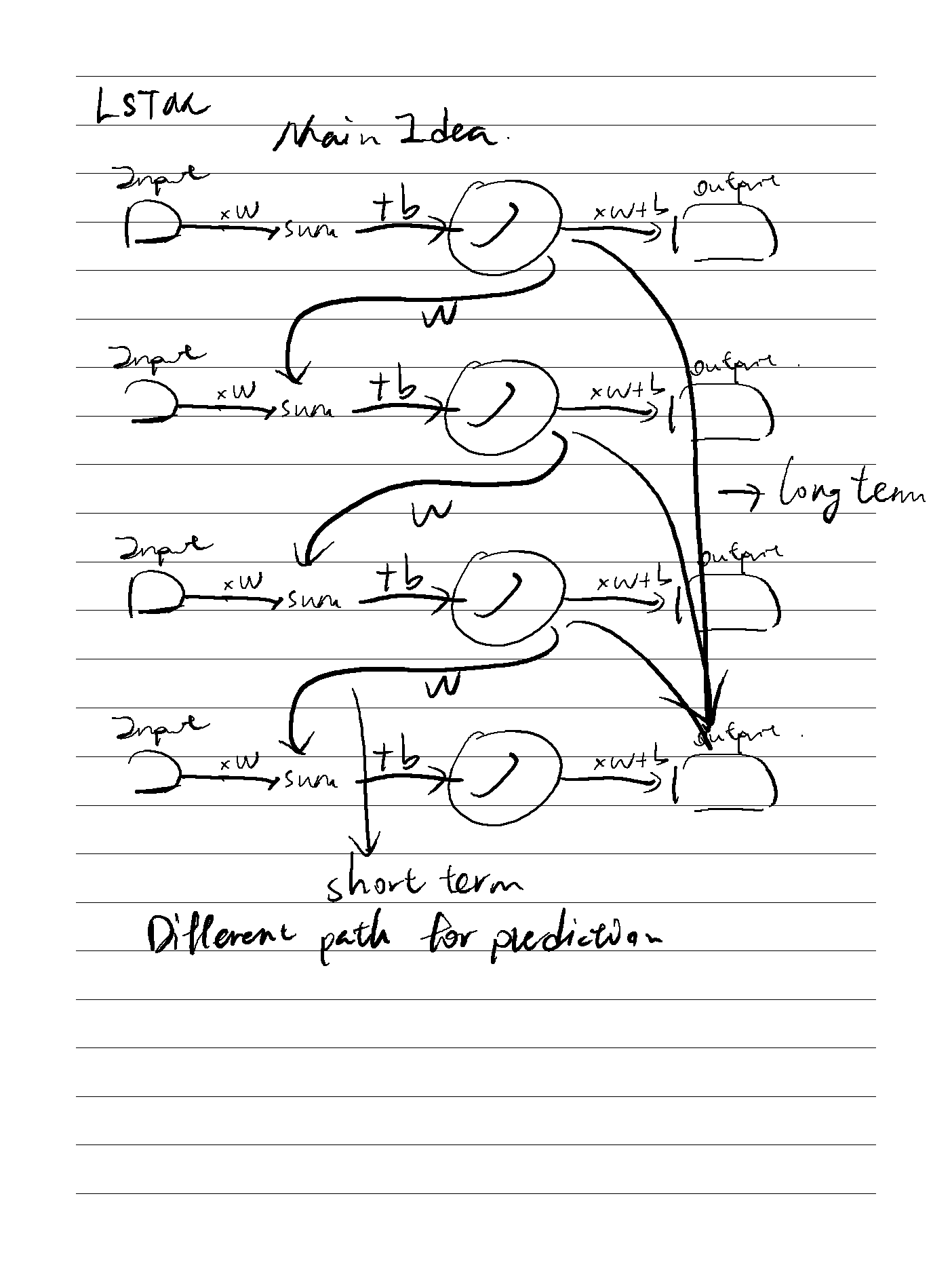

LSTM is mainly used to address the problem of exponentially vanishing or exploding gradients in recurrent networks.

On the Properties of Neural Machine Translation: Encoder–Decoder Approaches

Compares the translation ability of the RNN Encoder-Decoder and the newly proposed GRU, and finds that the GRU has the advantage and is able to capture grammar.

Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling

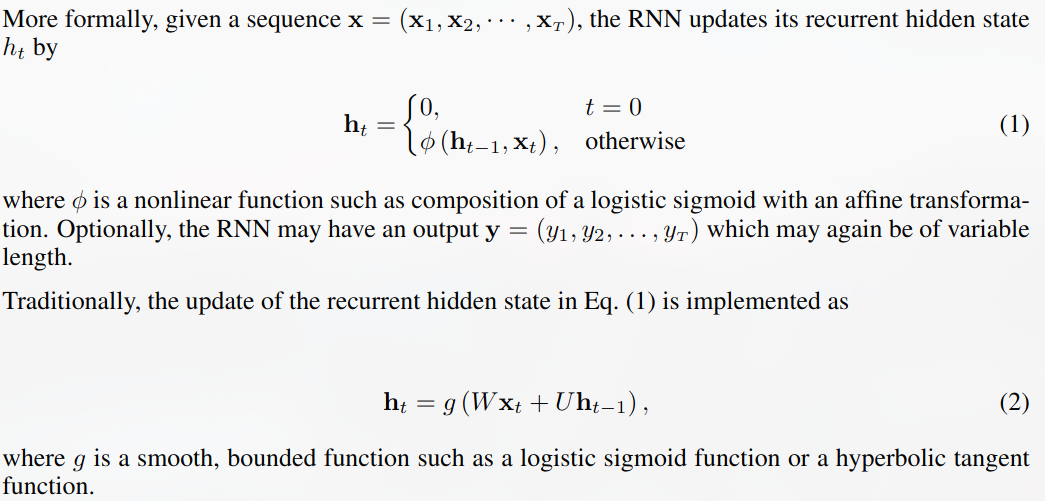

The paper first explains how an RNN implements memory through its hidden state.
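As a rough illustration of how a hidden state carries memory, here is a minimal sketch of a vanilla RNN in NumPy. The function names, the weight initialization, and the sizes are assumptions made for this example; they are not taken from the paper.

```python
import numpy as np

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One vanilla RNN step: h_t = tanh(W_xh x_t + W_hh h_prev + b_h)."""
    return np.tanh(W_xh @ x_t + W_hh @ h_prev + b_h)

def run_rnn(xs, hidden_size, seed=0):
    """Run the RNN over a sequence xs of shape (time, input_size)."""
    rng = np.random.default_rng(seed)
    input_size = xs.shape[1]
    # Illustrative random weights (an assumption, not trained parameters).
    W_xh = rng.normal(scale=0.5, size=(hidden_size, input_size))
    W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
    b_h = np.zeros(hidden_size)
    h = np.zeros(hidden_size)  # initial hidden state
    states = []
    for x_t in xs:
        # The new state depends on the current input and the previous state.
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        states.append(h)
    return np.stack(states)

# Only the first frame is non-zero; later frames are all zeros.
xs = np.zeros((5, 3))
xs[0] = 1.0
states = run_rnn(xs, hidden_size=4)

# With all-zero input and zero bias, the hidden state stays at zero.
silent = run_rnn(np.zeros((5, 3)), hidden_size=4)
```

Because the bias is zero, any non-zero value in `states[1:]` can only come from the first frame, passed forward through the hidden state.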

raw data: for each subject (S1, S2, …), each action (walking, waiting, smoking, …), and each sub-sequence (1/2):

$(n) \times 99$ (np.ndarray, float32)

data_utils.load_data() used by translate.read_all_data()

train data: a dictionary keyed by (subject_id, action, subaction_id, 'even') that holds the even rows of the raw data, with one-hot encoding columns appended for the action type. If the action is specified (the normal case), a single all-ones column is appended to the raw data. Size of each dictionary value:

$(n/2) \times (99 + \text{actions count})$
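The shape above can be reproduced with a small NumPy sketch. The helper name `even_rows_with_one_hot`, the assumption that "even rows" means row indices 0, 2, 4, …, and the action count of 15 are illustrative choices, not the project's actual code.

```python
import numpy as np

def even_rows_with_one_hot(raw, action_idx, n_actions):
    """Keep the even rows of raw and append one-hot action columns.

    raw: array of shape (n, d). Returns shape (ceil(n/2), d + n_actions).
    """
    even = raw[::2]  # rows 0, 2, 4, ... (assumed meaning of "even rows")
    d = raw.shape[1]
    out = np.zeros((even.shape[0], d + n_actions), dtype=np.float32)
    out[:, :d] = even               # pose features
    out[:, d + action_idx] = 1.0    # one-hot column for this action
    return out

# Toy raw sequence with n = 8 frames and 99 features.
raw = np.arange(8 * 99, dtype=np.float32).reshape(8, 99)

# Several actions: 15 one-hot columns, column 2 is set.
seq = even_rows_with_one_hot(raw, action_idx=2, n_actions=15)
print(seq.shape)  # (4, 114)

# A single specified action: the one-hot block is one all-ones column.
single = even_rows_with_one_hot(raw, action_idx=0, n_actions=1)
print(single.shape)  # (4, 100)
```

With `n_actions=1` the appended block reduces to the all-ones column described for the single-action case.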

complete data: all data joined together, across the different subjects, actions, and sub-sequences:

$(n) \times 99$

translate.read_all_data() used by translate.train()

train set: the normalized train data, with the dimensions whose $std < 1e-4$ (computed over the complete data) thrown out. Size of each dictionary value:

$(n/2) \times ((99 - \text{unused dimension count}) + \text{actions count})$

After analyzing the complete data, the human pose dimension is fixed to $54$.
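The filtering and normalization step can be sketched as follows. This is a simplified illustration under stated assumptions: the helper names are hypothetical, only the pose columns are handled (the one-hot action columns are left out), and the toy data has 6 columns rather than 99.

```python
import numpy as np

def normalization_stats(complete_data, eps=1e-4):
    """Per-dimension mean/std over the complete data, plus the kept dimensions."""
    mean = complete_data.mean(axis=0)
    std = complete_data.std(axis=0)
    dims_to_use = np.where(std >= eps)[0]  # drop near-constant dimensions
    return mean, std, dims_to_use

def normalize(seq, mean, std, dims_to_use):
    """Keep only the used dimensions and standardize them."""
    return (seq[:, dims_to_use] - mean[dims_to_use]) / std[dims_to_use]

# Toy "complete data": 100 frames, 6 dimensions, two of them constant.
rng = np.random.default_rng(0)
complete = rng.normal(size=(100, 6))
complete[:, 1] = 3.0
complete[:, 4] = 0.0

mean, std, dims_to_use = normalization_stats(complete)
normalized = normalize(complete, mean, std, dims_to_use)
print(dims_to_use.tolist())  # [0, 2, 3, 5]
print(normalized.shape)      # (100, 4)
```

On the real data the same kind of filter is what reduces the 99 raw dimensions to the 54 that are kept.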

Seq2SeqModel.get_batch() used by translate.train()

total_seq: $60$ ($[0,59]$)

source_seq_len: $50$

target_seq_len: $10$

batch_size: $16$

encoder_inputs: $16\times 49\times (54+\text{actions count})$

Interpretation: [batch,frame,dimension]

frame range: $[0,48]$

decoder_inputs: $16\times 10\times (54+\text{actions count})$

frame range: $[49,58]$

decoder_outputs: $16\times 10\times (54+\text{actions count})$

frame range: $[50,59]$
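The frame ranges listed above follow from slicing each 60-frame window in three ways. The sketch below only reproduces that index arithmetic; the function name `split_batch` and the action count of 15 are assumptions, and the random sampling of windows that a real batch builder would need is omitted.

```python
import numpy as np

def split_batch(batch, source_seq_len=50, target_seq_len=10):
    """Split windows of shape (batch, 60, dim) into encoder/decoder tensors."""
    assert batch.shape[1] == source_seq_len + target_seq_len
    # Frames 0..48: everything the encoder sees.
    encoder_inputs = batch[:, 0:source_seq_len - 1]
    # Frames 49..58: decoder inputs, starting at the last source frame.
    decoder_inputs = batch[:, source_seq_len - 1:source_seq_len + target_seq_len - 1]
    # Frames 50..59: targets, i.e. the decoder inputs shifted by one frame.
    decoder_outputs = batch[:, source_seq_len:]
    return encoder_inputs, decoder_inputs, decoder_outputs

dim = 54 + 15  # 54 pose dimensions plus an assumed 15 action columns
batch = np.zeros((16, 60, dim), dtype=np.float32)
batch[:, :, 0] = np.arange(60)  # store the frame index in column 0

enc, dec_in, dec_out = split_batch(batch)
print(enc.shape, dec_in.shape, dec_out.shape)
# (16, 49, 69) (16, 10, 69) (16, 10, 69)
```

The decoder outputs are the decoder inputs shifted forward by one frame, so at each step the decoder is trained to predict the next frame.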

encoder_inputs: Tensor form of encoder_inputs from Seq2SeqModel.get_batch()

`torch.from_numpy(encoder_inputs).float()`

decoder_inputs: Tensor form of decoder_inputs from Seq2SeqModel.get_batch()

For detailed usage, please see [Adopted] human-motion-prediction-pytorch\src\predict.ipynb

The Kinect camera's output is not guaranteed to match the input expected by this model (some features are cut off), so further research is needed.

Run pyKinectAzure\examples\exampleBodyTrackingTransformationComparison to record the camera output; the recording is saved as a .npy file in pyKinectAzure\saved_data.