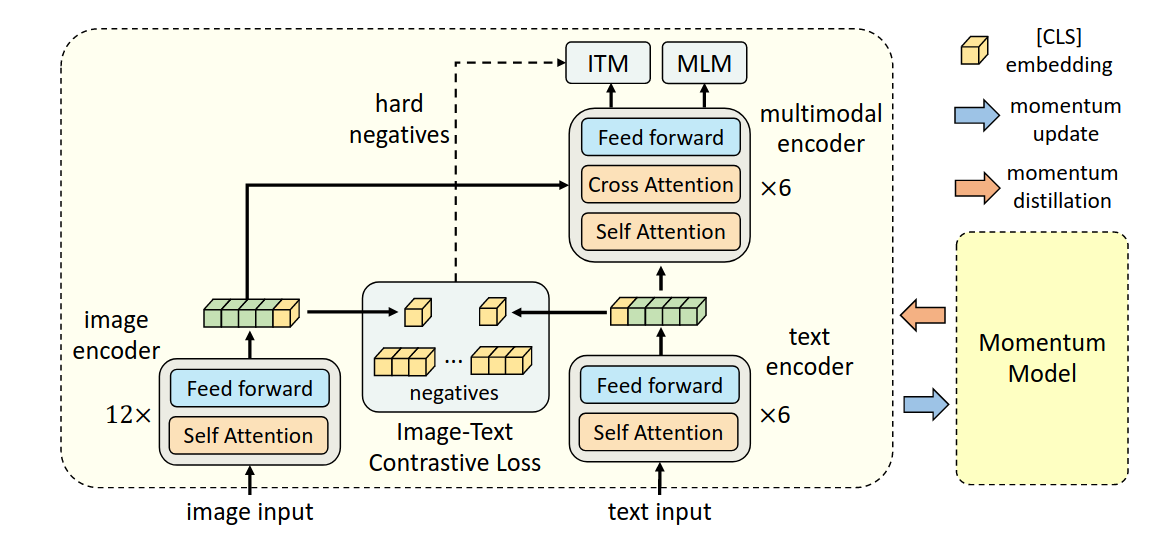

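The backbone is BERT (trained with MLM).

This work argues that the image encoder should be larger than the text encoder. The text encoder therefore uses only six self-attention layers to extract features, and the remaining six cross-attention layers serve as the multimodal encoder.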
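The layer split described above can be sketched as a simple plan over a 12-layer BERT stack. This is a minimal illustration, not the authors' code: the names `LayerPlan` and `split_bert_layers` are hypothetical, and it assumes the text layers are the first six and the fusion layers the last six.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LayerPlan:
    index: int             # position in the original BERT layer stack
    role: str              # "text" (self-attention only) or "fusion"
    cross_attention: bool  # True if the layer also attends to image features


def split_bert_layers(num_layers: int = 12, num_text_layers: int = 6) -> List[LayerPlan]:
    """Assign each BERT layer to the text encoder or the multimodal encoder.

    Assumption: the first `num_text_layers` layers form the text encoder and
    the remaining layers gain cross-attention and form the multimodal encoder.
    """
    if not 0 <= num_text_layers <= num_layers:
        raise ValueError("num_text_layers must be between 0 and num_layers")
    plan = []
    for i in range(num_layers):
        is_fusion = i >= num_text_layers
        plan.append(LayerPlan(i, "fusion" if is_fusion else "text", is_fusion))
    return plan


if __name__ == "__main__":
    for layer in split_bert_layers():
        print(layer)
```

Keeping the text encoder at half the BERT depth leaves the rest of the parameter budget for cross-modal fusion, which matches the note's claim that the image encoder should be the larger of the two unimodal encoders.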

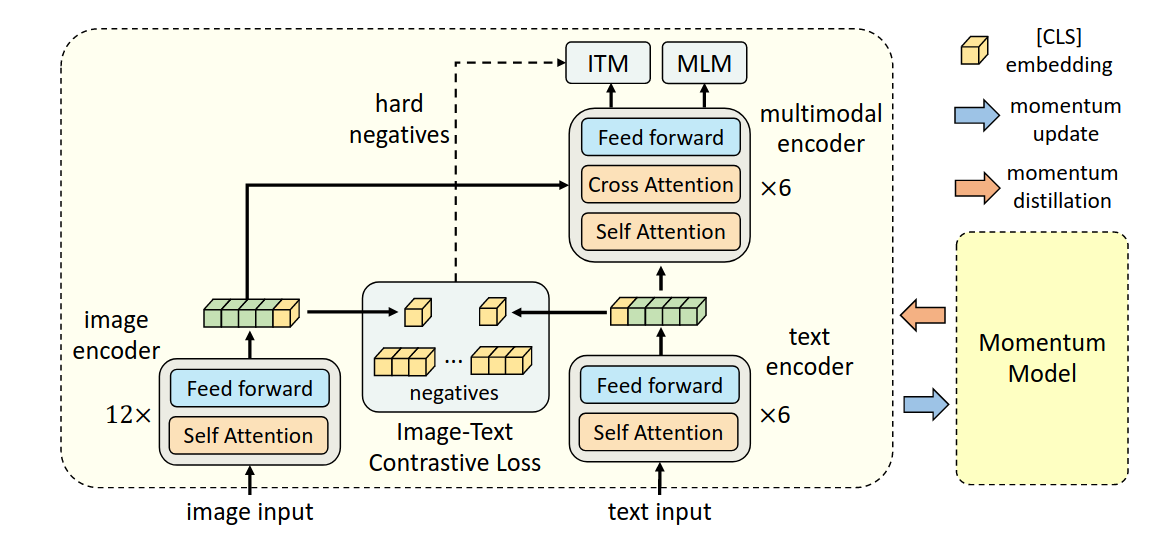

A vision-language model that unifies vision-language understanding and generation tasks.

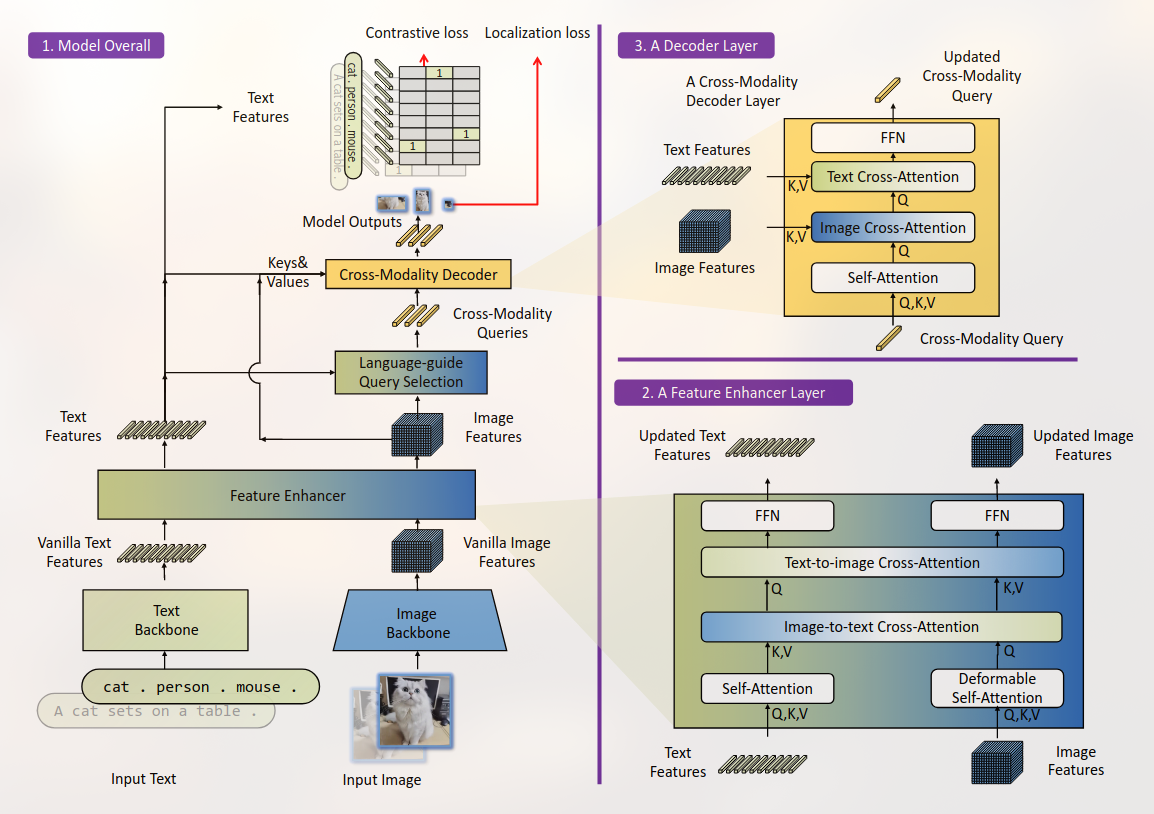

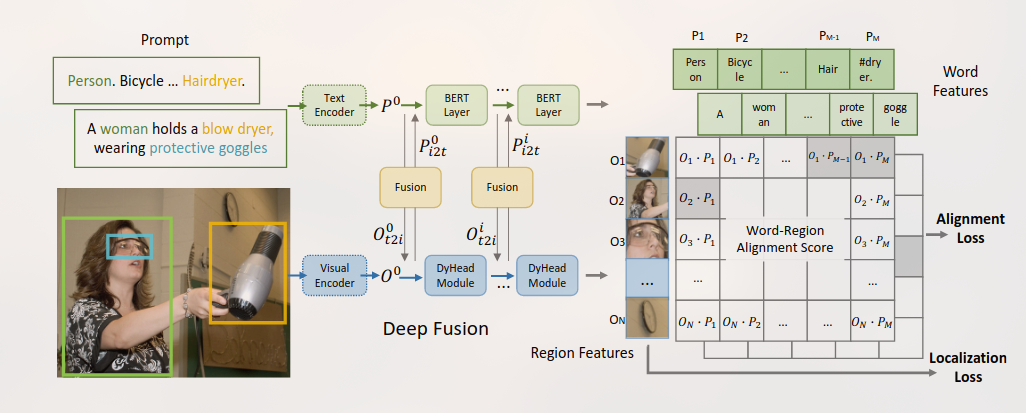

GLIP is a model that learns object-level, language-aware, and semantic-rich visual representations.

It unifies object detection and phrase grounding for pre-training.

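In human-machine collaborative work environments, accurately understanding and reasoning about the work scene is essential. Traditional methods often rely on static perception techniques and struggle to handle dynamically changing scene information. With advances in deep learning and large language models, multimodal reasoning that combines large scene models with knowledge graphs will provide stronger dynamic perception and intelligent reasoning for environment understanding.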

Docker Proxy Configuration | FuYao Docker Documentation

`~/fuyao/my-docker/`

`mkdir -p ~/fuyao/my-docker && cd ~/fuyao/my-docker`

`FROM infra-registry-vpc.cn-wulanchabu.cr.aliyuncs.com/data-infra/fuyao:luome-250704-0233`

`# Use the Hong Kong node (GitHub is reachable)`

`# Image address format`

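gsplit_plain nil

Cause: the Neovim version inside the container differs from the local one, so the plugin API is incompatible.

Fix: pin the same Neovim version in the Dockerfile as the one installed locally.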

`# Check the local version`
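To confirm that the container and the local machine really run the same Neovim release, the two `nvim --version` outputs can be compared. The sketch below is only an illustration: the helper names `parse_nvim_version` and `versions_match` are hypothetical, and it assumes the first line of the output has the form `NVIM v<major>.<minor>.<patch>`. Suffixes such as `-dev` are ignored, so two different development builds of the same release would still compare as equal.

```python
import re
from typing import Optional, Tuple

# Matches a line such as "NVIM v0.10.2" at the start of `nvim --version` output.
_VERSION_RE = re.compile(r"^NVIM v(\d+)\.(\d+)\.(\d+)", re.MULTILINE)


def parse_nvim_version(version_output: str) -> Optional[Tuple[int, ...]]:
    """Return (major, minor, patch) from `nvim --version` text, or None."""
    match = _VERSION_RE.search(version_output)
    if match is None:
        return None
    return tuple(int(part) for part in match.groups())


def versions_match(local_output: str, container_output: str) -> bool:
    """True only if both outputs parse and report the same release."""
    local = parse_nvim_version(local_output)
    container = parse_nvim_version(container_output)
    return local is not None and local == container


if __name__ == "__main__":
    local = "NVIM v0.10.2\nBuild type: Release\n"
    container = "NVIM v0.9.5\nBuild type: Release\n"
    print(versions_match(local, container))
```

The two input strings would come from running `nvim --version` once on the host and once inside the container.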

Fix: make sure the mason bin directory is on PATH.

`ENV PATH="${PATH}:/root/.local/share/nvim/mason/bin"`
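Whether the directory actually ended up on PATH can be checked from inside the container. The following is a small sketch under stated assumptions: `dir_on_path` is a hypothetical helper, the container is Linux (so entries are separated by `:`), and the mason path is the one from the `ENV` line above.

```python
import os
import posixpath

# Path taken from the ENV line in the Dockerfile.
MASON_BIN = "/root/.local/share/nvim/mason/bin"


def dir_on_path(directory: str, path_value: str, sep: str = ":") -> bool:
    """Return True if `directory` is one of the entries in a PATH string.

    Entries are normalized first, so a trailing slash does not matter.
    """
    wanted = posixpath.normpath(directory)
    entries = [entry for entry in path_value.split(sep) if entry]
    return any(posixpath.normpath(entry) == wanted for entry in entries)


if __name__ == "__main__":
    # Reads the PATH of the current process, e.g. when run inside the container.
    print(dir_on_path(MASON_BIN, os.environ.get("PATH", "")))
```

This only checks that the directory is listed; it does not verify that the directory exists or contains any mason-installed binaries.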

Use `--site=fuyao_hk` to access GitHub.

Use COPY instead of curl.

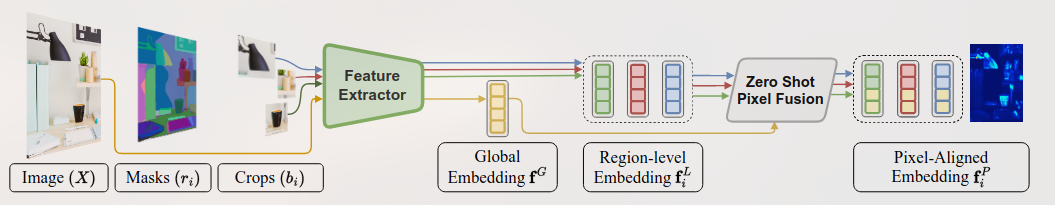

Formula for aggregating the features from different frames $X_t$ onto the feature points in M: